Arista Flattens Networks for Large Enterprises with Splines

Arista Networks, which boasts serial entrepreneur Andy Bechtolsheim as its chief development officer and chairman, has been selling products for five years against Cisco Systems, Juniper Networks, Mellanox Technologies, and others in the networking arena. Its largest customer has 200,000 servers connected on a single network. So Arista can hardly be thought of as a startup. But the company is still an upstart, and is launching a new set of switches that deliver an absolutely flat network, called a spline, to customers who need moderate scale, simplicity, and low cost.

Arista has actually trademarked the term "spline" to keep others from using it as they have the leaf-spine naming convention that Arista came up with for its original products back in 2008. A spline network is aimed at the low end of extreme-scale computing, topping out at just over 2,000 server nodes, but it provides some significant advantages over leaf-spine networks, Anshul Sadana, senior vice president of customer engineering, explains to EnterpriseTech.

The innovation five years ago with leaf-spine networks, which other switch vendors now mimic, was to create a Layer 2 network that has only two tiers, basically merging the core and aggregation layers of a more traditional network setup into a spine, with top-of-rack switches being the leaves. This cuts costs, but more importantly, a leaf-spine network is architected to handle a substantial amount of traffic between interconnected server nodes (what is called east-west traffic), while a three-tier network is really architected to connect a wide variety of devices out to the Internet or an internal network (which is called north-south traffic). Arista correctly saw that clouds, high frequency trading networks, and other kinds of compute clusters were going to have far more east-west traffic than north-south traffic.

With a leaf-spine network, every server on the network is exactly the same distance away from all other servers – three port hops, to be precise. The benefit of this architecture is that you can just add more spines and leaves as you expand the cluster and you don't have to do any recabling. You also get more predictable latency between the nodes.

But not everyone needs to link 100,000 servers together, which a leaf-spine setup can do using Arista switches. And just to be clear, that particular 200,000 node network mentioned above has 10 Gb/sec ports down from the top-of-rack switches to the servers and 40 Gb/sec uplinks to the spine switches, and to scale beyond 100,000 nodes, Arista had to create a spine of spine switches based on its 40 Gb/sec devices. Sadana says that only about four customers in the world build networks that large; they are very much the exception, not the rule.

Arista has over 2,000 customers, with its initial customers coming from the financial services sector, followed by public cloud builders and service providers, Web 2.0 application providers, and supercomputing clusters. Among cloud builders, service providers, and very large enterprises, Sadana says that somewhere between 150 and 200 racks is a common network size, with 40 server nodes per rack being typical among the Arista customer base. That works out to 6,000 to 8,000 servers.

"And then you move one level down, and you hit the large enterprises that have many small clusters, or a medium-sized company where the entire datacenter has 2,000 servers," Sadana explains. "Many companies have been doing three-tier networks, but only now are we seeing enterprises migrate to 10 Gb/sec Ethernet and as part of that migration they are using a new network architecture. We have seen networks with between 200 to 2,000 servers among large enterprises, with 500 servers in a cluster being a very common design. These enterprises have many more servers in total – often 50,000 to 100,000 machines – but each cluster has to be separate for compliance reasons."

This segregation of networks among enterprises was one of the main motivations behind the development of the new one-tier spline network architecture and a set of new Ethernet switches that deliver it. And by the way, you can build more scalable two-tier leaf-spine networks out of these new Arista switches if you so choose.

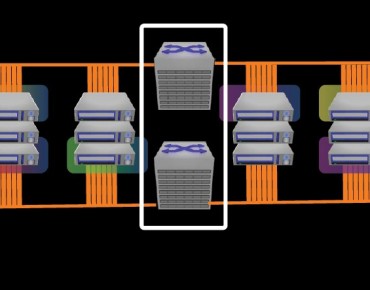

With the spline setup, you put two redundant switches in the middle of the row and you link each server to those switches out of two server ports. The multiple connections are just for high availability. The important thing is that each server is precisely one hop from any other server on the network.
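To make the hop counts concrete, here is a minimal sketch that models both layouts as toy graphs and counts the switches on the shortest path between two servers. The node names, the tiny topologies, and the `switch_hops` helper are illustrative assumptions, not anything published by Arista.

```python
from collections import deque

def switch_hops(links, src, dst):
    """Count the switches on the shortest path between two servers."""
    # Build an undirected adjacency map from the list of cables.
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    # Breadth-first search; dist counts cables traversed so far.
    dist = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # A path of n cables between two servers crosses n - 1 switches.
            return dist[node] - 1
        for nxt in adj[node]:
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    raise ValueError("no path between servers")

# Spline: every server is cabled to both redundant switches (hypothetical names).
spline = [(srv, sw) for srv in ("srv1", "srv2", "srv3")
          for sw in ("splineA", "splineB")]

# Leaf-spine: servers hang off a leaf, and every leaf is cabled to every spine.
leaf_spine = [("srv1", "leaf1"), ("srv2", "leaf1"), ("srv3", "leaf2")]
leaf_spine += [(leaf, spine) for leaf in ("leaf1", "leaf2")
               for spine in ("spine1", "spine2")]

print(switch_hops(spline, "srv1", "srv3"))      # two servers on the spline
print(switch_hops(leaf_spine, "srv1", "srv3"))  # two servers on different leaves
```

In this toy model the spline pair is separated by a single switch, while the servers on different leaves are separated by three (leaf, spine, leaf).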

You also don't have stranded ports with a spline network. Large enterprises often have a mix of fat and skinny nodes in their racks, and they typically have somewhere between 24 and 32 servers per rack. If you put a 48-port top-of-rack switch in to connect those machines up to a spine, you will have anywhere from 16 to 24 ports on that switch that will never be used. These are stranded ports, and they are an abomination before the eyes of the chief financial officer. What the CFO is going to love is that a spline network takes fewer switches and cables than either a three-tier or two-tier network, and basically you can get 10 Gb/sec connectivity for the price of 1 Gb/sec Ethernet switching. Here's how Arista is doing the math comparing a one-tier spline network for 1,000 servers to a three-tier network based on Cisco gear and its own leaf-spine setup:

The power draw on the new Arista 7250X and 7300X switches is also 25 percent lower per 10 Gb/sec port than on the existing machines in the Arista line, says Sadana. This will matter to a lot of shops working in confined spaces and on tight electrical and thermal budgets.

The original 64-port 10 Gb/sec low-latency 7050 switch that put Arista on the map five years ago was based on Broadcom's Trident+ network ASIC, and its follow-on 40 Gb/sec model used Broadcom's Trident-II ASIC. The 7150 switches used Intel's Fulcrum Bali and Alta ASICs. The new 7250X and 7300X switches put multiple Trident-II ASICs into a single switch to boost the throughput and port scalability. All of the switches run Arista's EOS network operating system, which is a hardened variant of the Linux operating system designed just for switching.

At the low end of the new switch lineup from Arista is the 7250QX-64, which has multiple Trident-II chips for Layer 2 and Layer 3 switching as well as a quad-core Xeon processor with 8 GB of its own memory for running network applications inside of the switch. The 7250QX-64, as the name suggests, has 64 40 Gb/sec ports and uses QSFP+ cabling. You can use cable splitters to make it into a switch that supports 256 10 Gb/sec ports. This device has 5 Tb/sec of aggregate switching throughput and can handle up to 3.84 billion packets per second. Depending on the packet sizes, port-to-port latencies range from 550 nanoseconds to 1.8 microseconds, and the power draw under typical loads is under 14 watts per 40 Gb/sec port. The switch supports VXLAN Layer 3 overlays and it also supports OpenFlow protocols, so its control plane can be managed by an external SDN controller. The 7250QX-64 has 4 GB of flash storage and a 100 GB solid state drive for the switch functions as well as a 48 MB packet buffer.

The 7250QX-64 is orderable now and will be shipping in December; it costs $96,000. That works out to $1,500 per 40 Gb/sec port or $375 per 10 Gb/sec port if you use splitter cables. (That does not include the cost of the splitter cable.)
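The per-port figures are simple division, sketched below. The four-way split per QSFP+ port is inferred from the 64-to-256 port conversion described above, and the constant names are only illustrative.

```python
LIST_PRICE_USD = 96_000   # 7250QX-64 price quoted in the article
PORTS_40G = 64            # native 40 Gb/sec ports
SPLIT = 4                 # assumed: each QSFP+ port splits into four 10 Gb/sec ports

per_40g = LIST_PRICE_USD / PORTS_40G
per_10g = LIST_PRICE_USD / (PORTS_40G * SPLIT)

print(f"${per_40g:,.0f} per 40 Gb/sec port")
print(f"${per_10g:,.0f} per 10 Gb/sec port (splitter cables not included)")
```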

If you want to build a leaf-spine network from the 7250QX-64 and use cable splitters to convert it into a 10 Gb/sec switch, you can have up to 49,152 server nodes linked. That configuration assumes you have 64 spine switches and 256 leaf switches and a 3:1 oversubscription level on the bandwidth. Sadana says that the typical application in the datacenter is only pushing 2 Gb/sec to 3 Gb/sec of bandwidth, so there is enough bandwidth in a 10 Gb/sec port to not worry about oversubscription too much. If you wanted to use the top-end 7508E modular switch as the spine and the 7250QX-64 as the leaves, you can support up to 221,184 ports running at 10 Gb/sec with the same 3:1 bandwidth oversubscription. This network has 64 spines and 1,152 leaves.
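The node counts above can be reproduced with a few lines of arithmetic. This is a sketch under stated assumptions: every port runs at 10 Gb/sec, each leaf has exactly one uplink to every spine, oversubscription is the ratio of downlinks to uplinks on a leaf, and the 1,152-port figure for the 7508E spine is inferred from the leaf count quoted above. The function name is hypothetical.

```python
def leaf_spine_capacity(leaf_ports, spine_ports, oversubscription):
    """Return (spines, leaves, server_ports) for a two-tier fabric.

    Assumes uniform port speed, one uplink from each leaf to every spine,
    and oversubscription expressed as downlinks : uplinks on each leaf.
    """
    uplinks = leaf_ports // (oversubscription + 1)  # 3:1 -> a quarter of the ports go up
    downlinks = leaf_ports - uplinks                # the rest face the servers
    spines = uplinks                                # one uplink per spine switch
    leaves = spine_ports                            # each spine port feeds one leaf
    return spines, leaves, leaves * downlinks

# 7250QX-64 with splitters (256 ports) as both leaf and spine.
print(leaf_spine_capacity(256, 256, 3))
# 7250QX-64 leaves under a spine with an assumed 1,152 10 Gb/sec ports.
print(leaf_spine_capacity(256, 1152, 3))
```

At 3:1, each 256-port leaf keeps 192 ports for servers and sends 64 uplinks to the spines, which is where the 64-spine figure in both configurations comes from.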

The 7300X modular switches, also based on multiple Trident-II ASICs, come in three chassis sizes and support three different line cards. These are used for larger spline networks, and to get to the 2,048 server count, you need the top-end 7300X chassis. Here are the enclosure speeds and feeds:

And here are the line cards that can be slotted into the enclosures:

Here's the bottom line. The 7300X tops out at over 40 Tb/sec of aggregate switching bandwidth, with up to 2.56 Tb/sec per line card, and can handle up to 30 billion packets per second. It can have up to 2,048 10 Gb/sec ports or 512 40 Gb/sec ports, and latencies are again below 2 microseconds on port-to-port hops.
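The aggregate throughput figures line up with the port counts if you assume, as switch vendors commonly do, that both directions of each full-duplex port are counted. The helper below is a sketch under that assumption, not Arista's published method.

```python
def aggregate_tbps(ports, gbps_per_port, full_duplex=True):
    """Aggregate switching throughput in Tb/sec for a given port count."""
    # Assumption: vendor-style accounting that counts both directions per port.
    directions = 2 if full_duplex else 1
    return ports * gbps_per_port * directions / 1000

print(aggregate_tbps(64, 40))     # 7250QX-64, 64 ports at 40 Gb/sec
print(aggregate_tbps(512, 40))    # top-end 7300X, 512 ports at 40 Gb/sec
print(aggregate_tbps(2048, 10))   # top-end 7300X, 2,048 ports at 10 Gb/sec
```

Under that accounting the 7250QX-64 comes out at 5.12 Tb/sec and the fully loaded 7300X at 40.96 Tb/sec either way it is ported, matching the "5 Tb/sec" and "over 40 Tb/sec" figures quoted.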

The 7300X series can be ordered now and will ship in the first quarter of 2014. It costs $500 per 10 Gb/sec port or just under $2,000 per 40 Gb/sec port for the various configurations of the machine, according to Sadana. The top-end 7500E switch, which was chosen by BP for its 2.2 petaflops cluster for seismic simulations, costs around $3,000 per 40 Gb/sec port, by the way. This beast supports more protocols and has more bandwidth per slot than the 7300X. The 7500E also has 100 Gb/sec line cards and the 7300X does not have them – yet. But Sadana says they are in the works.